I’ve seen the hottest of terrible hot-takes over the last couple of days about Apple’s announcement this past Tuesday (although it leaked a few days before) that their new flagship iPhone, the iPhone X, will use a biometric system involving facial identification as the secure authentication mechanism for the phone. No more TouchID, which uses your fingerprint as your “key” to unlock the phone; we are now in the world of FaceID.

Let’s get this out of the way early in this essay: biometrics are for convenience, passcodes are for security. This doesn’t mean that biometrics aren’t secure, but they are secure in a different way, against different threats, for different reasons. The swap of FaceID for TouchID does nothing to lessen the security of your device, nor does it somehow give law enforcement or government actors increased magical access to the information on your phone.

You’d have thought, from the crazed reactions I’ve seen on Twitter and in the media, that Apple had somehow neglected to think of all of the most obvious ways this can be cheated.

FaceID could potentially pose some pretty serious security problems https://t.co/xnHx6Lob1C

— WIRED (@WIRED) September 13, 2017

So how worried should we be about Apple’s Face ID https://t.co/IA9bEbyv1D

— DO ENGINEERING (@doengineering) September 15, 2017

Apple’s FaceID Could Be a Powerful Tool for Mass Spying https://t.co/Xfy81NkDcx pic.twitter.com/Zg9bSbxaiy

— Technology Bits (@Technology_Bits) September 15, 2017

and my personal favorite

With FaceID, cops can just point your phone at your face while they have you in handcuffs then look through your phone without a warrant.

— Jerrah Mormont (@BostonJerry) September 12, 2017

The Wired article above, by Jake Laperruque, includes the breathless passage:

And this could in theory make Apple an irresistible target for a new type of mass surveillance order. The government could issue an order to Apple with a set of targets and instructions to scan iPhones, iPads, and Macs to search for specific targets based on FaceID, and then provide the government with those targets’ location based on the GPS data of devices’ that receive a match.

If we’re throwing out possibilities…any smartphone could do that right now based on photo libraries. If there was a legal order to do so. And IF the technology company in question (either Google or Apple, if we’re sticking to mobile phones as the vector) did indeed build that functionality (which would take a long, long time) and then did employ it on their millions and millions of phones (also: long time), it would involve an enormous amount of engineering resources. Coordination of the “real” target vs family members who just happened to have photos on their phones of Target X should be fairly easy to do via behavioral profiling and secondary image analysis.

But that, like the FaceID supposition above, is bonkers to believe. If anything, FaceID is more secure in every way than the equivalent attack via standard photo libraries. If a nation-state with the power to compel Apple or Google into doing something this complicated and strange really wanted to know where you were…they wouldn’t need Apple or Google’s help to do so.

The truth of the matter is that FaceID is no less secure than the systems we have now on Apple devices (here I am not including Android devices as there are simply too many hardware makers to be certain of the security). TouchID, the fingerprint authentication process that is available for use on every current iPhone (and the new iPhone 8 and 8 Plus), every current iPad, and multiple models of MacBook, uses your fingerprint as the “key” to a hash that is stored on a hardware chip known as the Secure Enclave on the phone. When you place your finger on the TouchID sensor, it isn’t taking a picture of your print, or storing your print in any way. The information that is stored in the Secure Enclave isn’t retrievable by anything except your phone. Your fingerprints aren’t being stored at Apple Headquarters on some server. There is no “master database” of the fingerprints of all iPhone users. The authentication is entirely local, as witnessed by the fact that you have to enroll your print on every iOS device separately.

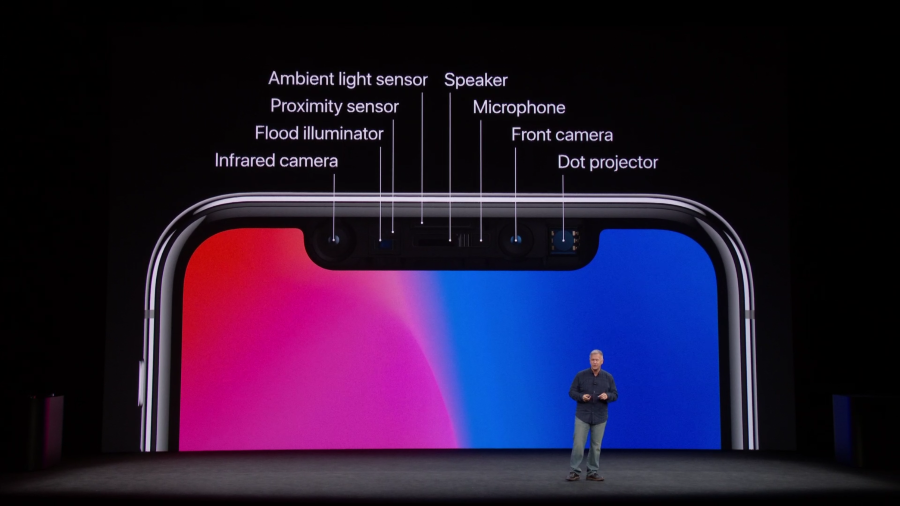

FaceID appears to be exactly the same setup, with exactly the same security oversight as TouchID. It’s entirely local to the phone, and all of the information (a “hash” of information about your face…it’s really not fair to call it a “picture”) is stored in the Secure Enclave within the iPhone. We haven’t seen the full security report on FaceID and iOS 11 yet, but I am certain it will be available soon (the report covering iOS 10 and TouchID is available here). Given the other well-considered aspects of security on iOS 11 that we have seen, such as requiring a passcode before trusting an untrusted computer, I am confident that iOS 11 and FaceID will be at least as secure as their previous iterations.
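To make the “entirely local” point concrete, here is a toy sketch in Python of the shape of the idea. It is emphatically not Apple’s implementation: `ToySecureEnclave`, `enroll`, and `matches` are names I made up for illustration, and the exact-match keyed hash is a simplification (real biometric matching has to be approximate, since no two scans are identical). What it shows is the two properties described above: the stored data never leaves the device, and enrollment on one device means nothing to another.

```python
import hashlib
import hmac
import os


class ToySecureEnclave:
    """Hypothetical stand-in for an on-device secure store.

    Assumption for illustration only: templates are protected with a
    per-device secret and compared by exact match. Real systems compare
    mathematical representations approximately.
    """

    def __init__(self):
        # Each device generates its own secret; it is never shared.
        self.__device_secret = os.urandom(32)
        self.__templates = []

    def _protect(self, features):
        # Keyed hash of the scan, bound to this device's secret.
        return hmac.new(self.__device_secret, features, hashlib.sha256).digest()

    def enroll(self, features):
        # Store only the protected template, not the raw scan.
        self.__templates.append(self._protect(features))

    def matches(self, features):
        # The only thing that ever comes out is a yes/no answer.
        candidate = self._protect(features)
        return any(hmac.compare_digest(candidate, t) for t in self.__templates)


phone = ToySecureEnclave()
tablet = ToySecureEnclave()
phone.enroll(b"my-fingerprint-features")

print(phone.matches(b"my-fingerprint-features"))   # enrolled on this device
print(phone.matches(b"someone-else"))              # different person
print(tablet.matches(b"my-fingerprint-features"))  # never enrolled here
```

Note that the class deliberately has no method that returns a template. In this sketch, the rest of the system can only ask “does this match?”, which is the design choice that makes a central “master database” impossible to build from the outside.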

Is it possible that Apple, the most valuable technology company in the world in large part due to their ability to develop hardware and software in concert with each other, completely missed something in making FaceID? Of course it’s possible. But none of the ways that technology of this sort has failed at other companies (racial bias, poor security models, data leakage) has yet proven true for TouchID. I do not believe they will be true for FaceID either.

Even setting aside the purely technical aspects, legally there is no difference in the risks of using FaceID over using TouchID. On the tweet above about police holding your phone up to your face to unlock it, it is important to note that they can compel a fingerprint now. It is entirely legal (with a lot of “if”s and “but”s) for a police officer to force your finger onto your phone to unlock it. No warrant is necessary for that to happen. FaceID is exactly the same as TouchID is now, as far as legal allowances, burden of proof, and such go. In the case of preventing law enforcement access to your phone, the only answer is a strong password and your refusal to give it to someone.

It isn’t clear to me if FaceID is going to be a good user experience…without devices in users’ hands, we have no idea. But the knee-jerk response that somehow Apple is building a massive catalog of faces is neither true, nor possible given the architectures of their hardware and software.

This isn’t to say that there isn’t some real danger somewhere:

Apple does FaceID fairly well—local data+secure enclave, etc. Not the issue. We’re building the infrastructure of authoritarianism. That is.

— Zeynep Tufekci (@zeynep) September 13, 2017

I think Zeynep has this (as with most things) exactly right. This technical implementation is really quite good. The normalization of the technology in our culture may well not be…but this is why I am so vehement about defending this positive implementation as positive. Let Apple’s method of doing this be the baseline, the absolute minimum amount of care and thought that we will accept for a system that watches us. They are doing it well and thoughtfully, so let’s understand that and not let anyone else do it poorly. And for goodness’ sake don’t cry wolf when technologies understand their risks and are built securely. Because just like in the story, when the real wolves show up, it will be that much harder for those of us paying attention to raise the alarm.

EDIT: After writing this entire thing, I found Troy Hunt’s excellent analysis, which says many of these same things in a much better way than I. Go read that if you want further explication of my take on this, as I agree with his essay entirely.