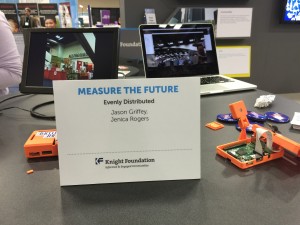

It’s been too long since a public update made its way out for Measure the Future. In light of the rapidly approaching ALA Annual 2016, here’s the current state of the Project.

The Good News

Enormous amounts of code have been written, and we have the most complicated parts nailed down. When we started on this Project, the idea of using microcomputers like the Edison to do the sort of computer vision (CV) analysis that we were asking for was still pretty ambitious. We’ve worked steadily on getting the CV coding on the Edison done, and it’s a testament to our Scout developer, Clinton, that it’s as capable as it is.

The other major piece of the Project, the Mothership, was also challenging, although in a different way. Working through the design of the user interfaces and how we can make the process as easy as possible for users turned out to be more difficult than we’d imagined (isn’t it always?). Setting up new Scouts, deciding how libraries will interact with the graphs and visualizations we’re making, and most importantly ensuring that our security model is rock solid are all really difficult problems. Our Mothership developer, Andromeda, has been amazing at spotting problems and maneuvering to fix them before they bite us.

But if I specified good news, you know there’s some bad news hiding behind it.

The Bad News

We’re delayed.

We have working pieces of the project, but we don’t have the whole just yet.

Despite enormous amounts of work, Measure the Future is behind schedule. The goal of the project, from the very beginning back in 2014, was to launch at ALA Annual 2016. While we are tantalizingly close, we are definitely not ready for public launch, and with ALA Annual 2016 now just a couple of weeks away, it seemed like the necessary time to admit to ourselves and the public that we’re going to miss that launch window.

As noted in the “Good News” section, the individual pieces are in place. However, before these are useful for libraries, the connection between them has to be bulletproof. We are working to make the setup and connection as automatic and robust as we possibly can, and as it turns out, networking is hard. Really hard. No, even harder than that.

We’re struggling with making this integration between the two sides automatic and bulletproof. There’s no doubt that in order for the Project to be useful to libraries, it has to be as easy as possible to implement, and we are far from easy at this point. We still have work to do.

I’m very unhappy that we’re missing our hoped-for ALA Annual 2016 launch date. There’s a lot of benefit to launching at Annual, and I’m very disappointed that we’re not going to hit that goal. But I’d rather miss the date than release a product to the library world that isn’t usable by everyone.

Conclusion

Measure the Future is still launching, and doing so in 2016. But we’re going to take a little longer, test a little more thoroughly, get the hardware into the hands of our Alpha testers, and ensure that when we do release, it’s more than ready for libraries to use. We’ve also been able to make a deal with a Beta partner that will really test what we’re able to do, and we’re really excited about the possibilities on that front. This extra time also gives us an opportunity for additional partnerships and planning for getting the tool out to libraries. More news and announcements on that front in a month or so.

We’re going to make sure we give the libraries, museums, and other non-profits of the world a tool that reveals the invisible activity taking place in their spaces. And we’re going to do it this year. Jason will be attending ALA Annual in Orlando, and he’ll be talking about Measure the Future every chance he gets. We don’t have a booth (a booth without the product in hand seemed presumptuous), but it’s easy to get in touch with Jason, so if you have questions about the project, feel free to throw them his way.